
As cybercriminals improve their methods, so too does the cybersecurity industry – the arms race between the two isn't news. But here's what is – one of the earliest and most developed examples of artificial intelligence we have in the world might finally give protection from cyberthreats the edge we've been waiting for.

Cybersecurity used to be a very either/or affair. A piece of malware appeared and cybersecurity vendors quickly issued updates to warn your local internet security application to watch for it. If they were fast enough, you caught it before it caught you.

Then came a new kind of threat detection. Variously called predictive or heuristic, it let cybersecurity software take a reasonably good guess about which programs or processes came from the bad guys, based on their similarity to other digital artefacts it already knew were bad news.

Let's say a virus can be expressed as '12345'. The software can be told to treat a file containing '12354', or just '123', as suspect and flag it for further checking. But detection is about much more than file contents. Today cyberthreats can be found in anything from user behaviour to social engineering.
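The '12345' example can be made concrete with a toy matcher. This is a minimal sketch, assuming signatures and samples are short strings like those in the example; the function names and thresholds are hypothetical, and real products use far richer signatures. It reports an exact hit, a near match (one edit away, counting a swap of neighbouring characters as a single edit) and a partial fragment of at least three characters.

```python
# Toy heuristic matcher for the '12345' example. KNOWN_BAD, max_edits and
# min_fragment are hypothetical illustration values, not a vendor's settings.
KNOWN_BAD = "12345"


def osa_distance(a: str, b: str) -> int:
    """Edit distance where insert, delete, substitute and adjacent swap each cost 1."""
    d = [[0] * (len(b) + 1) for _ in range(len(a) + 1)]
    for i in range(len(a) + 1):
        d[i][0] = i
    for j in range(len(b) + 1):
        d[0][j] = j
    for i in range(1, len(a) + 1):
        for j in range(1, len(b) + 1):
            cost = 0 if a[i - 1] == b[j - 1] else 1
            d[i][j] = min(d[i - 1][j] + 1,         # deletion
                          d[i][j - 1] + 1,         # insertion
                          d[i - 1][j - 1] + cost)  # substitution
            if i > 1 and j > 1 and a[i - 1] == b[j - 2] and a[i - 2] == b[j - 1]:
                d[i][j] = min(d[i][j], d[i - 2][j - 2] + 1)  # adjacent swap
    return d[len(a)][len(b)]


def classify(sample: str, signature: str = KNOWN_BAD,
             max_edits: int = 1, min_fragment: int = 3) -> str:
    """Return 'match', 'near-match', 'fragment' or 'clean'."""
    if signature in sample:
        return "match"
    if osa_distance(sample, signature) <= max_edits:
        return "near-match"
    # Every substring of the signature at least min_fragment characters long
    fragments = {signature[i:j]
                 for i in range(len(signature))
                 for j in range(i + min_fragment, len(signature) + 1)}
    if any(fragment in sample for fragment in fragments):
        return "fragment"
    return "clean"


for sample in ["12345", "12354", "123", "99999"]:
    print(sample, classify(sample))
# 12345 match
# 12354 near-match
# 123 fragment
# 99999 clean
```

Anything other than 'clean' would be queued for the further checking described above; the swap-aware distance is what lets '12354' count as a single step away from '12345'.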


AI ON THE CASE


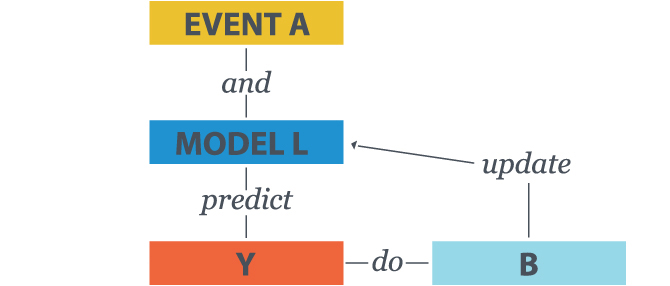

The task facing artificial intelligence cybersecurity agents is to watch for a program, user behaviour or pattern of network traffic that seems 'off', just like heuristic scanning.

What makes an AI software agent stand apart is that it not only acts on the threat but also incorporates the lesson learned back into its memory banks – without the need for a human programmer to 'hard code' new rules or red flags to watch for.

The AI learns, building up an ever-more refined and accurate set of rules and experiences it can apply to future incidents.

In the example below, Event A is fed into existing Model L to predict outcome Y. The AI agent then takes action B and refines Model L for the next event.
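The predict, act and refine cycle can be sketched in a few lines. This is a minimal illustration, assuming Model L is a simple perceptron over numeric event features and that the agent later learns whether each event really was malicious (for example, from an analyst). The class, the features and the block/allow actions are all hypothetical, not how any particular vendor builds its agents.

```python
class ModelL:
    """Hypothetical 'Model L': a perceptron scoring numeric event features."""

    def __init__(self, n_features: int, learning_rate: float = 1.0) -> None:
        self.weights = [0.0] * n_features
        self.bias = 0.0
        self.learning_rate = learning_rate

    def predict(self, event: list[float]) -> bool:
        """Outcome Y: True means the event looks malicious."""
        score = self.bias + sum(w * x for w, x in zip(self.weights, event))
        return score > 0

    def refine(self, event: list[float], actually_malicious: bool) -> None:
        """Perceptron rule: nudge the weights only when the prediction was wrong."""
        if self.predict(event) == actually_malicious:
            return
        sign = 1.0 if actually_malicious else -1.0
        self.weights = [w + self.learning_rate * sign * x
                        for w, x in zip(self.weights, event)]
        self.bias += self.learning_rate * sign


def handle(model: ModelL, event: list[float], actually_malicious: bool) -> str:
    outcome_y = model.predict(event)               # Event A + Model L -> Y
    action_b = "block" if outcome_y else "allow"   # the agent acts on Y
    model.refine(event, actually_malicious)        # the lesson goes back in
    return action_b


# Hypothetical features: [failed logins in last hour, unfamiliar country, after hours]
model = ModelL(n_features=3)
print(handle(model, [5, 1, 1], actually_malicious=True))  # allow: nothing learned yet
print(handle(model, [5, 1, 1], actually_malicious=True))  # block: lesson absorbed
```

The first sighting slips through: a learning agent only improves as confirmed outcomes are fed back into the model, which is one reason data volume matters so much.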


WHERE THE ACTION IS

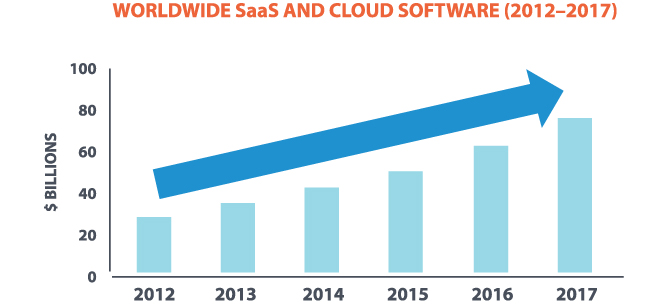

Given the speed and accuracy with which AI algorithms can improve their performance, their natural home is where the data is – online. Cloud computing networks and services are producing, storing and managing more data than we've ever had to deal with before. Here's how much just one component, software as a service (SaaS), is set to grow.


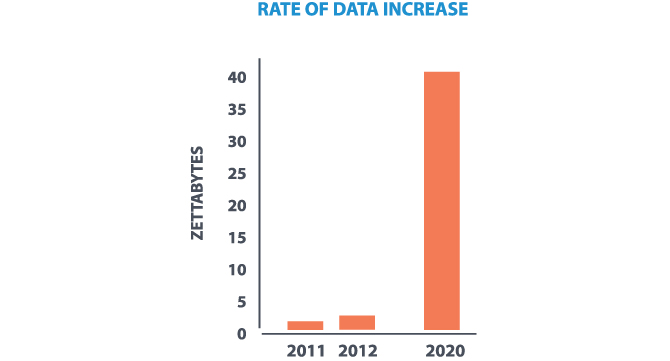

According to a study by industry analysis firm IDC, the human race generated 1.8 zettabytes (1.8 trillion gigabytes) of electronic data in 2011. In 2012 the figure was 2.8 zettabytes, and by 2020, according to the company, it will reach a whopping 40 zettabytes.
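As a quick sanity check on these figures, assuming decimal units (1 ZB = 10^21 bytes, 1 GB = 10^9 bytes), the gigabyte conversion and the compound annual growth implied between the 2012 and 2020 figures work out as follows:

```latex
\begin{aligned}
1.8\,\text{ZB} &= \frac{1.8 \times 10^{21}\,\text{bytes}}{10^{9}\,\text{bytes/GB}} = 1.8 \times 10^{12}\,\text{GB} \\
\text{growth}_{2012 \to 2020} &= \left(\frac{40\,\text{ZB}}{2.8\,\text{ZB}}\right)^{1/8} - 1 \approx 1.394 - 1 \approx 39\%\ \text{per year}
\end{aligned}
```

In other words, the projection amounts to a roughly 14-fold increase over eight years.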


All that data contains a wealth of information and insight into where malware and cyberthreats are coming from, how they trick us and spread, and the damage they can do. We're producing all that information in far higher volumes and at greater speeds than mere humans can hope to process – let alone scour for red flags. That's where AI comes in.

Take the example from above: your security application flags '12354' because it already knows '12345' is a digital nasty. Now scale that up to code millions of lines long, or to the logins and online activity of millions of users.

How much activity? Every minute we produce over 200 million emails, 2 million Google queries, 48 hours of YouTube video, 684,000 Facebook posts and over 100,000 tweets, and we spend nearly $A390,000 on consumer ecommerce.

AI is important at the big data level because of volume. When there are hundreds or thousands of data actions in a given period but only one or two of them are nefarious – or even suspect – it doesn't give AI algorithms much fodder to teach themselves something new.

It's only at the global scale of big data and cloud computing that such algorithms get millions or billions of opportunities to spot nasties, because they're scanning exabytes of data and the unfathomable number of actions it contains.
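The volume argument is simple arithmetic. The sketch below assumes a hypothetical base rate of one suspect event in every 1,000 (the real rate varies enormously by network and threat) and counts how many examples a learning algorithm would have to learn from at two illustrative, made-up volumes.

```python
# Hypothetical base rate: one suspect event in every 1,000.
ONE_IN = 1_000


def malicious_examples(n_events: int, one_in: int = ONE_IN) -> int:
    """Expected number of suspect events available to learn from."""
    return n_events // one_in


for label, n_events in [("small dataset", 2_000),
                        ("cloud-scale dataset", 1_000_000_000)]:
    print(f"{label}: {n_events:,} events -> about {malicious_examples(n_events):,} examples")
# small dataset: 2,000 events -> about 2 examples
# cloud-scale dataset: 1,000,000,000 events -> about 1,000,000 examples
```

At the same base rate, the larger dataset offers half a million times as many examples to learn from.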

Picture a police officer on patrol. Despite his training to watch for shifty behaviour, he only has two eyes and one brain, like the rest of us. Now give him Google Glass or some other augmented reality tool.

Augmented reality data might tell him whether the suspect knows he's been spotted and is likely to change his behaviour, whether he should move in or wait for backup, and dozens of other data points or 'signals' he can't possibly pick up just from looking.

When cybersecurity protection has access to the databases and datasets of the big data world, it's like the same policeman looking at a suspect or incident and being presented with all the associated context he could want to make a decision:

- Where the suspects have been before (geographic region)

- Whether they have prior offences (the same administrative contact as that of a URL that was previously compromised)

- If it's a statistically dangerous neighbourhood (ie with lots of links to piracy or pornography sites)
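The policeman's three context checks translate naturally into signals that can be combined into a score. This is a minimal sketch, assuming a URL is the 'suspect'; the lookup sets, point values and link threshold are hypothetical placeholders, and real systems learn such weightings rather than hard-coding them.

```python
from dataclasses import dataclass

# Hypothetical reputation data; a real system would query large, live datasets.
HIGH_RISK_REGIONS = {"region-x"}
COMPROMISED_ADMIN_CONTACTS = {"admin@example.net"}
RISKY_LINK_THRESHOLD = 10


@dataclass
class UrlContext:
    region: str                # where the suspect has been (geographic region)
    admin_contact: str         # administrative contact registered for the URL
    risky_outbound_links: int  # links to piracy or pornography sites


def risk_points(ctx: UrlContext) -> int:
    """Add up hypothetical points (out of 10) for each contextual red flag."""
    points = 0
    if ctx.region in HIGH_RISK_REGIONS:
        points += 3  # where the suspect has been before
    if ctx.admin_contact in COMPROMISED_ADMIN_CONTACTS:
        points += 5  # prior offence: shares a contact with a compromised URL
    if ctx.risky_outbound_links >= RISKY_LINK_THRESHOLD:
        points += 2  # statistically dangerous neighbourhood
    return points


print(risk_points(UrlContext("region-x", "admin@example.net", 25)))  # 10
```

Integer points keep the toy easy to read; a learned model would replace the fixed 3/5/2 split with weights fitted to past incidents.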

BEHAVIOURAL ANOMALIES

A human manager isn't likely to count the number of emails you send from your work account every day – in this privacy-aware day and age they'd be asking for a lawsuit if they did.

But a machine learning algorithm can build up a profile on user behaviour and be ready when a given activity doesn't fit. In one example a user sent 2,000 emails in a day when the normal level for his job function was 15. The system – which had built up a fair idea of how many emails certain employees normally send – notified managers after deciding something was up all by itself.

When the managers realised the employee had sent so many emails on his last day with the company, they had cause to investigate further, in case he'd been transmitting confidential data or restricted CRM records to give himself a leg-up in his next job.

Machine learning algorithms are very good at tracking the minute details of your routine: what time you log in to your work intranet, which databases you usually access to do your job, the numbers you usually call and so on.
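The email example in this section boils down to comparing today's activity with a learned baseline. This sketch assumes the baseline is simply recent daily email counts for the employee's job function and flags a day that sits more than three standard deviations above the average; the role name, history and threshold are hypothetical, and production systems model many more behaviours at once.

```python
from statistics import mean, stdev

# Hypothetical baseline: recent daily email counts for one job function
# (the text's example puts the normal level at about 15 a day).
ROLE_BASELINES = {"account-manager": [12, 15, 18, 14, 16, 15, 13]}


def is_anomalous(history: list[int], today: int, z_threshold: float = 3.0) -> bool:
    """Flag `today` if it is unusually high relative to `history`.

    Needs at least two historical values, because stdev() does.
    """
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        return today > mu  # perfectly flat history: any increase stands out
    return (today - mu) / sigma > z_threshold


baseline = ROLE_BASELINES["account-manager"]
print(is_anomalous(baseline, 17))     # False: an ordinary busy day
print(is_anomalous(baseline, 2_000))  # True: the last-day spike from the example
```

Only unusually high counts are flagged here, which matches the data-leak scenario; a quiet day would need a separate check.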

SOFTWARE BEHAVIOUR

AI algorithms are also really good at a process called 'at scale feature extraction'.

Scanning every line of code in a program consumes processing resources and takes time. Some malware can be released in hundreds of thousands of variants – too many for your internet security application to scan and quarantine when time is of the essence.

It's much more efficient to let a program reveal what it does through features such as opening documents, copying itself or accessing kernel files (the core of the operating system that runs your computer).

AI is great at abstracting software behaviour into features like these because it can teach itself how code in other programs performs similar actions. That gives it a much clearer (and faster) picture of what the program does, which in turn gives it a much more accurate clue about its intentions.
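Abstracting behaviour into features can be sketched as two steps: turn a raw activity log into a set of named behaviours, then measure how closely that set resembles known-bad profiles. This is a minimal illustration under stated assumptions: the event format, feature names, path prefixes and the two malware profiles are hypothetical, and Jaccard similarity stands in for the far more sophisticated learned models the article describes.

```python
# Hypothetical profiles of known-bad behaviour, expressed as feature sets.
KNOWN_BAD_PROFILES = {
    "self-spreading": {"copies_itself", "touches_kernel_files"},
    "document-stealer": {"opens_documents", "copies_itself"},
}
DOCUMENT_EXTENSIONS = (".doc", ".docx", ".pdf")
KERNEL_PATH_PREFIXES = ("/boot/", "/lib/modules/")  # Linux kernel image and modules


def extract_features(events: list[tuple[str, str]], own_path: str) -> set[str]:
    """Collapse raw (action, path) events into abstract behaviour features.

    ("copy", path) is assumed to mean the program copied the file at `path`.
    """
    features = set()
    for action, path in events:
        if action == "open" and path.endswith(DOCUMENT_EXTENSIONS):
            features.add("opens_documents")
        elif action == "copy" and path == own_path:
            features.add("copies_itself")
        elif action in ("read", "write") and path.startswith(KERNEL_PATH_PREFIXES):
            features.add("touches_kernel_files")
    return features


def jaccard(a: set[str], b: set[str]) -> float:
    """Share of features two sets have in common (0.0 to 1.0)."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def closest_profile(features: set[str]) -> tuple[str, float]:
    """Return the known-bad profile most similar to `features` and its score."""
    return max(((name, jaccard(features, profile))
                for name, profile in KNOWN_BAD_PROFILES.items()),
               key=lambda pair: pair[1])


log = [("copy", "/tmp/update.bin"), ("write", "/lib/modules/extra.ko")]
observed = extract_features(log, own_path="/tmp/update.bin")
print(closest_profile(observed))  # ('self-spreading', 1.0)
```

Comparing a handful of abstract features is far cheaper than scanning every line of code, and it still works when the malware ships in thousands of byte-level variants that all behave the same way.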

THE NEW ARMS RACE

It used to be that a virus was spotted, virus definitions were updated and disseminated, and then a new virus came along, built around what the malware creators knew your internet security application was now blocking.

Today virus definitions, software behaviour and user activity patterns are all collected and synthesised on AI supercomputers deep in the bowels of the cybersecurity providers. Now when they spot, pick apart and issue warnings about new threats, they do it in secret.

Where malware protection once happened, to some extent, in plain sight, AI methods are far more opaque, using algorithms that are as agile as they are secret. Without the easy insight they used to have into how threat prevention was engineered, cybercriminals don't really know what they're up against.

Drew Turney is a graphic designer and journalist. Illustrations and infographics by Bird Studios.

The views and opinions expressed in this communication are those of the author and may not necessarily state or reflect those of ANZ.


AI agents: the next step in cybersecurity

2015-11-26
